Hey there, I recently needed to pull some data out of my Zabbix instance for use elsewhere, so I had a look at Zabbix's API.

Turns out it is quite simple to use: you exchange JSON-RPC 2.0 messages with it over HTTP.

The communication flow is straightforward:

- Send username and password to the API

- Receive an auth token from the API

- Send your actual query to the API, including the auth token in the request

- Receive the queried data from the API
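
As a rough sketch of those steps, the two request messages could be built like this. The helper name `build_request` and the placeholder token are made up for illustration; the field names (`jsonrpc`, `method`, `params`, `id`, `auth`) are the ones used in the script further down. Nothing is sent over the network here.

```php
<?php
// Hypothetical helper (not part of the Zabbix API): builds one JSON-RPC 2.0
// request body. The auth token is only added once we have one.
function build_request($method, array $params, $id, $authtoken = null) {
    $request = array(
        'jsonrpc' => '2.0',
        'method'  => $method,
        'params'  => $params,
        'id'      => $id,
    );
    if ($authtoken !== null) {
        $request['auth'] = $authtoken;
    }
    return json_encode($request);
}

// Step 1: login request, no token yet.
$login = build_request('user.authenticate',
    array('user' => 'testuser', 'password' => 'xyz'), '1');

// Step 3: the actual query, carrying the token from the login response.
// 'PLACEHOLDER_TOKEN' stands in for whatever the API returned in step 2.
$query = build_request('hostgroup.get',
    array('output' => 'extend', 'sortfield' => 'name'), '2', 'PLACEHOLDER_TOKEN');

echo $login, "\n", $query, "\n";
```

The only difference between the two messages is the extra `auth` member on the second one.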

To test communication, I wrote a simple PHP script to fiddle around with the possibilities of the API:

<?php

/*
          _     _     _
 ______ _| |__ | |__ (_)_  __
|_  / _` | '_ \| '_ \| \ \/ /
 / / (_| | |_) | |_) | |>  <
/___\__,_|_.__/|_.__/|_/_/\_\ - API PoC

2012, looke
*/

$uri = "https://zabbix.foo.bar/api_jsonrpc.php";

$username = "testuser";

$password = "xyz";

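// Recursively print a nested array as "key: value" lines of HTML
function expand_arr($array) {
    foreach ($array as $key => $value) {
        if (is_array($value)) {
            echo "<i>".$key."</i>:<br>";
            expand_arr($value);
            echo "<br>\n";
        } else {
            // Escape values so markup in API data cannot break the page
            echo "<i>".$key."</i>: ".htmlspecialchars((string)$value)."<br>\n";
        }
    }
}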

// POST $data as JSON to $uri and return the decoded response (null on failure)
function json_request($uri, $data) {
    $json_data = json_encode($data);
    $c = curl_init();
    curl_setopt($c, CURLOPT_URL, $uri);
    curl_setopt($c, CURLOPT_RETURNTRANSFER, true);
    // CURLOPT_POST is a boolean switch; the body itself goes in CURLOPT_POSTFIELDS
    curl_setopt($c, CURLOPT_POST, true);
    curl_setopt($c, CURLOPT_POSTFIELDS, $json_data);
    curl_setopt($c, CURLOPT_HTTPHEADER, array(
        'Content-Type: application/json',
        'Content-Length: ' . strlen($json_data)
    ));
    // Disables certificate verification. Fine for a quick test against a
    // self-signed certificate, not something to keep in production.
    curl_setopt($c, CURLOPT_SSL_VERIFYPEER, false);
    $result = curl_exec($c);
    if ($result === false) {
        echo "<b>CURL Error:</b> ".curl_error($c)."<br>\n";
        return null;
    }
    /* Uncomment to see some debug info
    echo "<b>JSON Request:</b><br>\n";
    echo $json_data."<br><br>\n";
    echo "<b>JSON Answer:</b><br>\n";
    echo $result."<br><br>\n";
    echo "<b>CURL Debug Info:</b><br>\n";
    $debug = curl_getinfo($c);
    expand_arr($debug);
    echo "<br><hr>\n";
    */
    return json_decode($result, true);
}

// Log in and return the auth token, or null if the API did not return one
function zabbix_auth($uri, $username, $password) {
    $data = array(
        'jsonrpc' => "2.0",
        'method' => "user.authenticate",
        'params' => array(
            'user' => $username,
            'password' => $password
        ),
        'id' => "1"
    );
    $response = json_request($uri, $data);
    return isset($response['result']) ? $response['result'] : null;
}

// Fetch all host groups sorted by name; returns an empty array on failure
function zabbix_get_hostgroups($uri, $authtoken) {
    $data = array(
        'jsonrpc' => "2.0",
        'method' => "hostgroup.get",
        'params' => array(
            'output' => "extend",
            'sortfield' => "name"
        ),
        'id' => "2",
        'auth' => $authtoken
    );
    $response = json_request($uri, $data);
    return isset($response['result']) ? $response['result'] : array();
}

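$authtoken = zabbix_auth($uri, $username, $password);
if (!$authtoken) {
    // No token means the login failed; querying further would be pointless
    die("Authentication failed<br>\n");
}
expand_arr(zabbix_get_hostgroups($uri, $authtoken));

?>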

If everything worked, the script's output should look something like this:

0:
groupid: 5
name: Discovered Hosts
internal: 1

1:
groupid: 2
name: Linux Servers
internal: 0

2:
groupid: 7
name: NAS
internal: 0

3:
groupid: 6
name: Routers
internal: 0

4:
groupid: 3
name: Windows Servers
internal: 0

5:
groupid: 4
name: Zabbix Servers
internal: 0

Important

The authentication method that worked here is user.authenticate and NOT user.login as mentioned in the manual.
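
If you are unsure which of the two method names your installation accepts, one option is to try them in order. The following is only a sketch: `zabbix_try_login` and `$fake_send` are hypothetical names, the fake transport stands in for a real server so that the example runs without a network, and the assumption that both methods take the same `user`/`password` parameters may not hold for every Zabbix version.

```php
<?php
// Hypothetical helper: tries each login method name in turn and returns the
// first token it gets. $send is any callable that takes a request array and
// returns the decoded response array.
function zabbix_try_login(callable $send, $username, $password,
                          array $methods = array('user.login', 'user.authenticate')) {
    foreach ($methods as $method) {
        $response = $send(array(
            'jsonrpc' => '2.0',
            'method'  => $method,
            'params'  => array('user' => $username, 'password' => $password),
            'id'      => '1',
        ));
        // A JSON-RPC 2.0 success response carries "result", a failure "error".
        if (is_array($response) && isset($response['result'])) {
            return $response['result'];
        }
    }
    return null; // none of the method names worked
}

// Stand-in transport for demonstration only: pretends to be a server that
// knows user.authenticate but not user.login. The error body is invented.
$fake_send = function (array $request) {
    if ($request['method'] === 'user.authenticate') {
        return array('jsonrpc' => '2.0', 'result' => 'FAKE_TOKEN', 'id' => $request['id']);
    }
    return array('jsonrpc' => '2.0',
                 'error' => array('code' => -32601, 'message' => 'Method not found'),
                 'id' => $request['id']);
};

$token = zabbix_try_login($fake_send, 'testuser', 'xyz');
echo $token, "\n";
```

Against a real server, `$send` could be a small closure around the `json_request()` function from the script above, for example `function ($req) use ($uri) { return json_request($uri, $req); }`.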

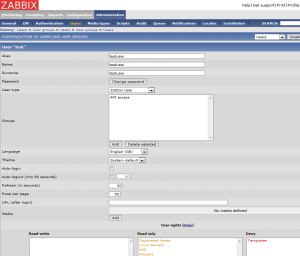

Setting up a Zabbix user with API access

Additional info

http://www.zabbix.com/documentation/1.8/api/getting_started